What is Data Engineering?

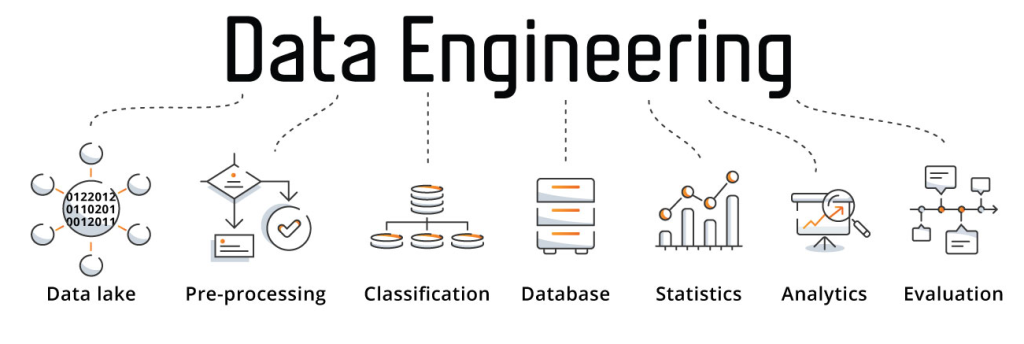

Data Engineering is the practice of designing, building, and maintaining the infrastructure that enables organizations to store, process, and analyze large volumes of data. It covers the technologies and practices used to create and manage an organization’s data architecture.

Data Engineers are responsible for the development, maintenance, and optimization of data pipelines, data warehouses, and other data-related infrastructure. They work with large amounts of data, often in real time, and are responsible for ensuring that the data is accurate, secure, and readily available for analysis.

Some of the key skills required for a Data Engineer include expertise in programming languages such as Python, Java, and SQL, as well as knowledge of distributed systems, cloud computing, and database design. They may also be familiar with big data frameworks such as Hadoop, Spark, and Kafka.

Who is a Data Engineer?

A Data Engineer is a professional who designs, develops, and maintains the infrastructure that enables an organization to process, store, and analyze large amounts of data. They are responsible for building and managing data pipelines, data warehouses, and other data-related systems that ensure that the data is accurate, secure, and readily available for analysis.

Data Engineers work closely with Data Scientists and other members of the data team to ensure that the organization’s data architecture fits its needs. They also work with stakeholders across the organization to understand their data requirements and make the data available and accessible for analysis.

Data Engineers typically have expertise in programming languages such as Python, Java, and SQL, as well as knowledge of distributed systems, cloud computing, and database design. They may also be familiar with big data frameworks such as Hadoop, Spark, and Kafka.

How to become a Data Engineer?

Becoming a Data Engineer requires a combination of education, technical skills, and practical experience. Here are some general steps that can help you become a Data Engineer:

- Education: A bachelor’s or master’s degree in Computer Science, Computer Engineering, Data Science, or a related field is typically required. Some employers may also consider candidates with degrees in Mathematics, Physics, or other quantitative fields.

- Technical Skills: Data Engineers need strong technical skills in programming languages such as Python, Java, and SQL, as well as knowledge of distributed systems, cloud computing, and database design. It’s also helpful to have experience with big data frameworks such as Hadoop, Spark, and Kafka.

- Practical Experience: Gaining practical experience in Data Engineering is critical. One way to gain experience is by working on personal projects or contributing to open-source projects. Another way is to complete internships or work on Data Engineering projects as part of your coursework.

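- Certifications: Obtaining industry certifications such as AWS Certified Big Data – Specialty (later renamed AWS Certified Data Analytics – Specialty), Cloudera Certified Data Engineer, or Google Cloud Certified Professional Data Engineer can also help demonstrate your skills and knowledge in Data Engineering. Certification names and availability change over time, so check each vendor’s current catalog.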

- Continuous Learning: Data Engineering is a constantly evolving field, so it’s important to stay up to date with new technologies and trends. You can do this by attending conferences, participating in online forums and communities, or taking courses and workshops.

In short, becoming a Data Engineer requires a combination of education, technical skills, practical experience, certifications, and continuous learning. It’s a challenging but rewarding career, and demand for skilled Data Engineers is expected to keep growing.

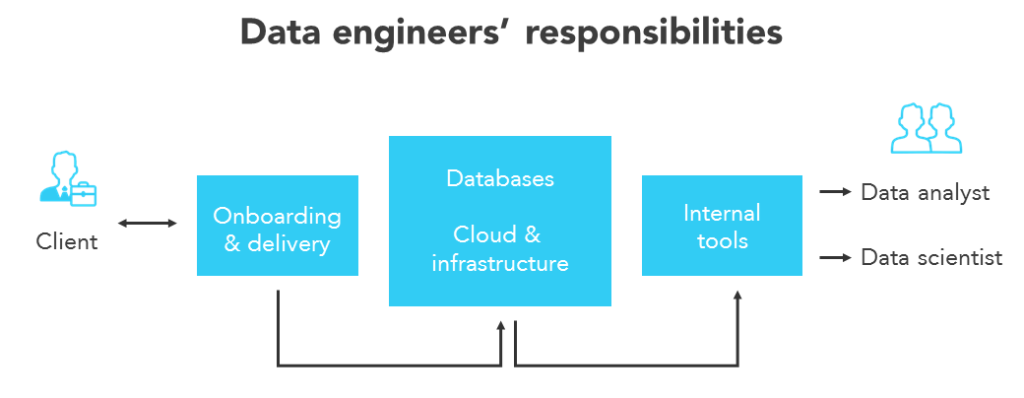

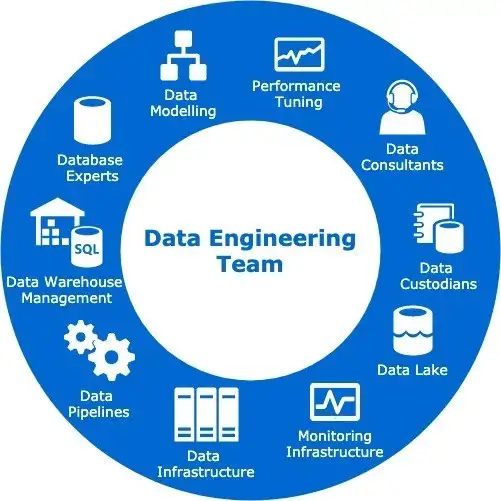

What are the Roles and Responsibilities of a Data Engineer?

The roles and responsibilities of a Data Engineer typically include:

- Designing and developing data pipelines: This involves designing and implementing data pipelines that move data from various sources into a central data repository for analysis.

- Building and managing data warehouses: This includes designing, building, and maintaining data warehouses that store large volumes of data and are optimized for fast querying.

- Developing and maintaining data infrastructure: This involves developing and maintaining the infrastructure that enables an organization to process, store, and analyze large amounts of data.

- Ensuring data quality and integrity: Data Engineers are responsible for ensuring that the data is accurate, complete, and consistent.

- Ensuring data security and privacy: This includes implementing security measures to protect data from unauthorized access, as well as ensuring compliance with data privacy regulations.

- Collaborating with data scientists and analysts: Data Engineers work closely with Data Scientists and other members of the data team to understand their data requirements and ensure that the data is available and accessible for analysis.

- Monitoring and optimizing data performance: This involves monitoring the performance of data systems and optimizing them for maximum efficiency and scalability.

Overall, the role of a Data Engineer is critical in ensuring that an organization’s data is properly managed, secured, and made available for analysis, which is essential for enabling data-driven decision-making and achieving business success.
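As a rough illustration of the data security and privacy responsibility listed above, one common protective measure is to replace a direct identifier with a keyed hash before the data reaches analysts. The sketch below uses only Python’s standard library. The field names, the sample record, and the hard-coded key are all hypothetical; a real system would load the key from a secrets store.

```python
import hashlib
import hmac

# Hypothetical secret key; in practice this would come from a secrets store,
# never from source code.
SECRET_KEY = b"replace-with-a-real-secret"


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Return a stable, keyed SHA-256 token for a sensitive value."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_record(record: dict, sensitive_fields: set) -> dict:
    """Copy a record, replacing each sensitive field with its token."""
    return {
        field: pseudonymize(str(value)) if field in sensitive_fields else value
        for field, value in record.items()
    }


# Illustrative record with a made-up email address.
raw = {"id": 1, "email": "john@example.com", "age": 30}
masked = mask_record(raw, {"email"})
```

Because the same input always maps to the same token, joins and group-bys on the masked column still work, while the original value is not stored downstream.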

Here are some examples of common data engineering tasks:

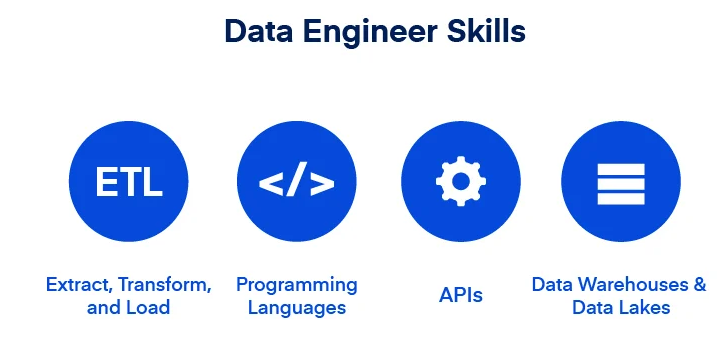

- Extract, Transform, Load (ETL) pipelines: Data Engineers design and implement ETL pipelines to move data from various sources into a central data repository for analysis. This involves extracting data from different sources, transforming it into a common format, and loading it into a target system.

- Data warehousing: Data Engineers build and maintain data warehouses, which are used to store large volumes of data and are optimized for fast querying. This involves designing the schema, selecting the appropriate data storage and retrieval technologies, and managing the data warehouse’s performance.

- Data modeling: Data Engineers create data models that represent the data stored in databases and data warehouses. This involves defining the structure of the data, the relationships between the data, and the rules for data validation.

- Database management: Data Engineers manage databases, which involves designing and maintaining the database schema, optimizing database performance, and ensuring data integrity and security.

- Cloud computing: Data Engineers work with cloud computing technologies to design, implement, and manage data infrastructure on cloud platforms such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP).

- Data quality and governance: Data Engineers are responsible for ensuring data quality and governance, which involves developing and implementing processes to ensure data accuracy, completeness, consistency, and privacy.

- Real-time data processing: Data Engineers design and implement real-time data processing systems that can process and analyze data as it is generated. This involves selecting appropriate technologies for streaming data processing, such as Apache Kafka, Apache Flink, or Apache Spark Streaming.

Here are some coding examples for common data engineering tasks:

- ETL pipelines:

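```python
import pandas as pd
from sqlalchemy import create_engine

# Connect to the source database (placeholder credentials)
source_engine = create_engine('postgresql://username:password@host/database')

# Extract: read the whole source table into a DataFrame
source_data = pd.read_sql_table('source_table', source_engine)

# Transform: drop rows that contain missing values
transformed_data = source_data.dropna()

# Connect to the target database (placeholder credentials)
target_engine = create_engine('postgresql://username:password@host/database')

# Load: write the result, replacing the target table if it already exists.
# index=False keeps the DataFrame's row index from becoming an extra column.
transformed_data.to_sql('target_table', target_engine, if_exists='replace', index=False)
```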
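The example above needs a running PostgreSQL server and only drops incomplete rows. As a complementary sketch of the "transform it into a common format" step, the version below pulls from two made-up sources with different field names and date formats, unifies them, and loads the result into an in-memory SQLite database that stands in for the target system. All names and sample values here are assumptions for illustration.

```python
import csv
import io
import sqlite3
from datetime import datetime

# Two hypothetical sources that describe the same kind of record differently.
CSV_SOURCE = "id,full_name,signup\n1,John,2023-01-15\n2,Jane,2023-02-20\n"
API_SOURCE = [{"user_id": 3, "name": "Bob", "signup_date": "03/05/2023"}]


def extract_csv(text):
    """Extract: parse CSV text into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(csv_rows, api_rows):
    """Transform: map both sources onto (id, name, ISO 8601 signup date)."""
    common = [(int(r["id"]), r["full_name"], r["signup"]) for r in csv_rows]
    for r in api_rows:
        # The API source is assumed to use MM/DD/YYYY dates.
        iso = datetime.strptime(r["signup_date"], "%m/%d/%Y").date().isoformat()
        common.append((r["user_id"], r["name"], iso))
    return common


def load(conn, rows):
    """Load: write the unified rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS users "
        "(id INTEGER PRIMARY KEY, name TEXT, signup_date TEXT)"
    )
    conn.executemany("INSERT INTO users VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # in-memory database stands in for the target
load(conn, transform(extract_csv(CSV_SOURCE), API_SOURCE))
rows = conn.execute("SELECT id, name, signup_date FROM users ORDER BY id").fetchall()
```

Keeping extract, transform, and load as separate functions makes each stage easy to test and swap out on its own.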

- Data warehousing:

```python
import snowflake.connector

# Connect to Snowflake
conn = snowflake.connector.connect(
    user='<user>',
    password='<password>',
    account='<account>',
    warehouse='<warehouse>',
    database='<database>',
    schema='<schema>'
)

# Create a new table in Snowflake
cur = conn.cursor()
cur.execute("""
CREATE TABLE my_table (
    id INTEGER,
    name VARCHAR(255),
    age INTEGER
)
""")

# Load data into Snowflake
data = [(1, 'John', 30), (2, 'Jane', 25), (3, 'Bob', 40)]
cur.executemany("INSERT INTO my_table (id, name, age) VALUES (%s, %s, %s)", data)
conn.commit()
```

- Data modeling:

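```python
from sqlalchemy import Column, Integer, String
# declarative_base lives in sqlalchemy.orm since SQLAlchemy 1.4;
# the older sqlalchemy.ext.declarative location is deprecated.
from sqlalchemy.orm import declarative_base

Base = declarative_base()


class MyTable(Base):
    """ORM model describing the structure of my_table."""
    __tablename__ = 'my_table'

    id = Column(Integer, primary_key=True)
    name = Column(String(255))
    age = Column(Integer)
```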
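The model above captures structure only. Data modeling, as described earlier, also covers relationships between tables and rules for data validation. Here is a minimal sketch of both, using SQLite from the standard library so it runs without a server. The `customers` and `orders` tables and their constraints are invented for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled

conn.executescript("""
CREATE TABLE customers (
    id   INTEGER PRIMARY KEY,
    name TEXT NOT NULL,
    age  INTEGER CHECK (age BETWEEN 0 AND 150)              -- validation rule
);
CREATE TABLE orders (
    id          INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(id),  -- relationship
    total       REAL CHECK (total >= 0)                     -- validation rule
);
""")


def try_insert(sql, params):
    """Run an INSERT and report whether the schema accepted it."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(sql, params)
        return True
    except sqlite3.IntegrityError:
        return False


ok_customer = try_insert("INSERT INTO customers VALUES (?, ?, ?)", (1, "John", 30))
bad_age = try_insert("INSERT INTO customers VALUES (?, ?, ?)", (2, "Jane", -5))
ok_order = try_insert("INSERT INTO orders VALUES (?, ?, ?)", (1, 1, 19.99))
orphan_order = try_insert("INSERT INTO orders VALUES (?, ?, ?)", (2, 99, 5.00))
```

Putting the rules in the schema means every writer is held to them, not just the pipelines that remember to validate.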

- Database management:

```python
import psycopg2

# Connect to PostgreSQL
conn = psycopg2.connect(
    host='<host>',
    database='<database>',
    user='<user>',
    password='<password>'
)

# Create a new table in PostgreSQL
cur = conn.cursor()
cur.execute("""
CREATE TABLE my_table (
    id SERIAL PRIMARY KEY,
    name VARCHAR(255),
    age INTEGER
)
""")
conn.commit()
```
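Database management also includes optimizing performance, which the snippet above does not touch. The sketch below shows one typical step: adding an index and checking that the query planner uses it. It swaps in SQLite (standard library) for PostgreSQL so it can run anywhere; in PostgreSQL the analogous check would use `EXPLAIN`. The index name and sample rows are made up.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE my_table (id INTEGER PRIMARY KEY, name TEXT, age INTEGER)")
conn.executemany(
    "INSERT INTO my_table (name, age) VALUES (?, ?)",
    [(f"user{i}", 20 + i % 50) for i in range(1000)],
)


def query_plan(sql):
    """Return SQLite's plan description for a statement as one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[-1] for row in rows)  # last column holds the detail text


QUERY = "SELECT * FROM my_table WHERE name = 'user42'"

plan_before = query_plan(QUERY)  # no index on name yet: full table scan
conn.execute("CREATE INDEX idx_my_table_name ON my_table (name)")
plan_after = query_plan(QUERY)   # planner can now search via the new index
```

Comparing the plan before and after a change is a cheap way to confirm that an optimization actually took effect.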

- Cloud computing:

```python
import boto3

# Connect to AWS S3
s3 = boto3.client(
    's3',
    aws_access_key_id='<aws_access_key_id>',
    aws_secret_access_key='<aws_secret_access_key>'
)

# Upload a file to S3
with open('my_file.txt', 'rb') as f:
    s3.upload_fileobj(f, '<bucket_name>', 'my_file.txt')
```

- Data quality and governance:

```python
import pandas as pd

# Load data into a DataFrame
data = pd.read_csv('my_data.csv')

# Check for missing values
if data.isnull().values.any():
    raise ValueError('Missing values detected')

# Check for duplicates
if data.duplicated().any():
    raise ValueError('Duplicate values detected')
```

- Real-time data processing:

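```python
from kafka import KafkaConsumer


def process_message(message):
    """Placeholder handler; replace with real processing logic."""
    print(message.value)


# Connect to Kafka
consumer = KafkaConsumer(
    'my_topic',
    bootstrap_servers=['localhost:9092'],
    auto_offset_reset='earliest',
    enable_auto_commit=True,
    group_id='my_group'
)

# Process messages from Kafka as they arrive
for message in consumer:
    process_message(message)
```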
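Consuming messages is only half of real-time processing; the other half is computing something as the data arrives. A common pattern is a tumbling window, which groups events into fixed, non-overlapping time buckets. The sketch below is a simplified, broker-free illustration with invented events. It assumes events arrive in timestamp order, which real stream processors such as Flink or Spark Streaming do not require.

```python
from collections import Counter

# Hypothetical events as (timestamp_in_seconds, event_type) pairs, in arrival order.
EVENTS = [(0, "click"), (12, "view"), (59, "click"), (61, "click"), (130, "view")]


def tumbling_windows(events, window_seconds=60):
    """Yield (window_start, counts) each time an event closes the current window.

    Assumes events arrive in timestamp order.
    """
    current_start, counts = None, Counter()
    for timestamp, event_type in events:
        # Every timestamp belongs to exactly one window, identified by its start.
        window_start = (timestamp // window_seconds) * window_seconds
        if current_start is not None and window_start != current_start:
            yield current_start, counts
            counts = Counter()
        current_start = window_start
        counts[event_type] += 1
    if current_start is not None:
        yield current_start, counts  # flush the last, still-open window


results = list(tumbling_windows(EVENTS))
```

Writing it as a generator means each window’s result is emitted as soon as a later event shows the window has ended, rather than after all input has been read.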

Note that these are just simple examples, and actual code for these tasks may be much more complex and involved. Data engineering requires a deep understanding of programming concepts and the tools and technologies used in data engineering.

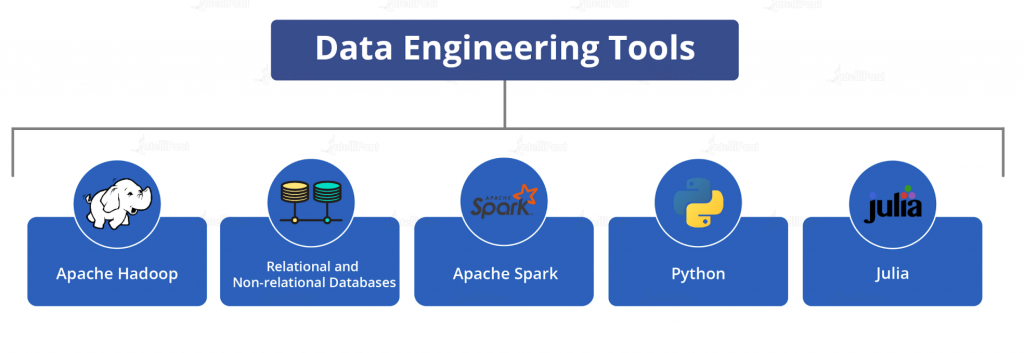

Data Engineering Tools

Data engineering involves working with a variety of tools and technologies to perform different tasks. Some commonly used data engineering tools include:

- ETL tools: ETL (extract, transform, load) tools are used to extract data from different sources, transform it into a suitable format, and load it into a target system. Some popular ETL tools include Talend, Informatica, Apache NiFi, and Microsoft SSIS.

- Big data processing frameworks: Big data processing frameworks are used to process large volumes of data distributed across a cluster of computers. Some popular big data processing frameworks include Apache Hadoop, Apache Spark, and Apache Flink.

- Cloud computing platforms: Cloud computing platforms provide on-demand access to computing resources over the internet. Some popular cloud computing platforms used for data engineering include Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP).

- Database management systems: Database management systems (DBMS) are used to store and manage structured and unstructured data. Some popular DBMS used for data engineering include PostgreSQL, MySQL, Microsoft SQL Server, and Oracle.

- Data warehousing tools: Data warehousing tools are used to store and manage large volumes of structured data. Some popular data warehousing tools include Snowflake, Amazon Redshift, and Google BigQuery.

- Data integration platforms: Data integration platforms provide tools and technologies for integrating data from different sources into a common format. Some popular data integration platforms include Apache Kafka, Apache NiFi, and Google Cloud Data Fusion.

- Data modeling tools: Data modeling tools are used to design and visualize data models. Some popular data modeling tools include ER/Studio, Visio, and Lucidchart.

- Workflow management tools: Workflow management tools are used to manage and orchestrate complex data pipelines. Some popular workflow management tools include Apache Airflow, Luigi, and Azkaban.

These are just some examples of the many tools and technologies used in data engineering. The specific tools and technologies used can vary depending on the organization and the nature of the data engineering tasks being performed.
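To give a feel for what the workflow management tools in the list above do at their core, here is a minimal sketch that runs a set of tasks in dependency order using `graphlib` from Python’s standard library (3.9+). The task names and the dependency graph are hypothetical. Tools like Apache Airflow add scheduling, retries, logging, and distributed execution on top of this basic idea.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

run_log = []


def make_task(name):
    """Build a placeholder task that only records that it ran."""
    def task():
        run_log.append(name)
    return task


# Hypothetical pipeline: each task maps to the set of tasks it depends on.
DEPENDENCIES = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join_tables": {"extract_orders", "extract_customers"},
    "load_warehouse": {"join_tables"},
}
TASKS = {name: make_task(name) for name in DEPENDENCIES}

# static_order() yields every task only after all of its dependencies.
for name in TopologicalSorter(DEPENDENCIES).static_order():
    TASKS[name]()
```

Declaring dependencies as data, instead of hard-coding the call order, is what lets an orchestrator detect cycles, run independent tasks in parallel, and resume from a failed step.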

Wrapping up

In summary, data engineering is the process of designing, building, and managing the infrastructure needed to support data storage, processing, and analysis. Data engineers play a critical role in helping organizations turn raw data into actionable insights. They are responsible for tasks such as data acquisition, data transformation, data storage, and data management.

To become a data engineer, one typically needs a strong foundation in computer science, mathematics, and database technologies, along with hands-on experience with data engineering tools. Commonly used categories include ETL tools, big data processing frameworks, cloud computing platforms, database management systems, data warehousing tools, data integration platforms, data modeling tools, and workflow management tools. The specific choices vary with the organization and the nature of the data engineering tasks being performed.