Data Pipeline

What is a Data Pipeline?

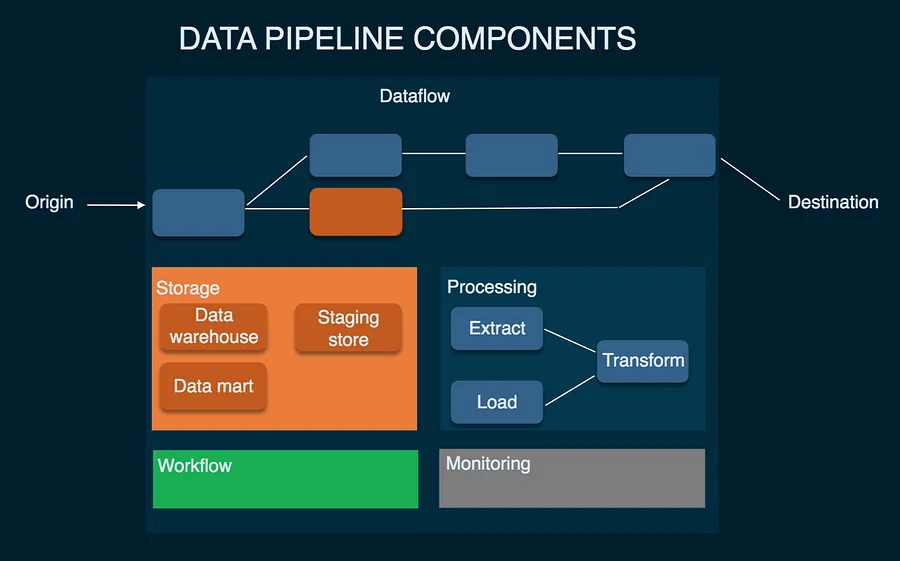

A data pipeline is a series of steps or processes that are used to extract, transform, and load data from various sources to a destination. The purpose of a data pipeline is to automate the flow of data, making it more efficient and reliable.

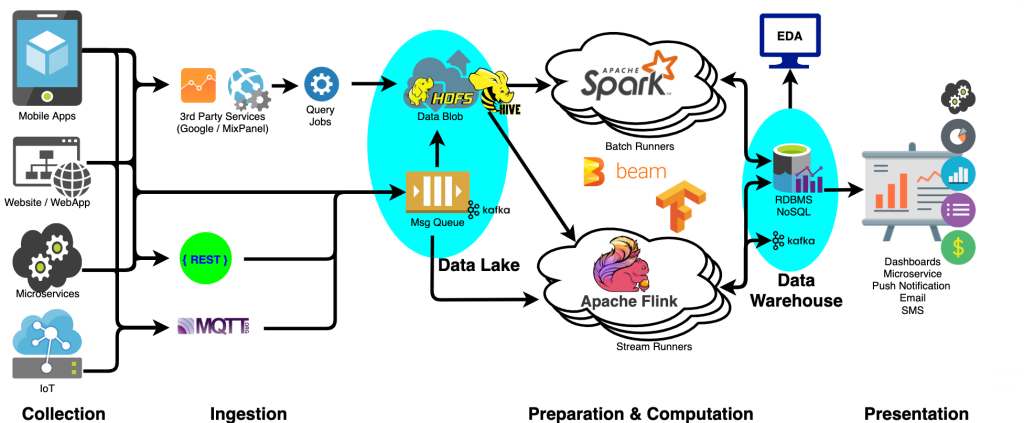

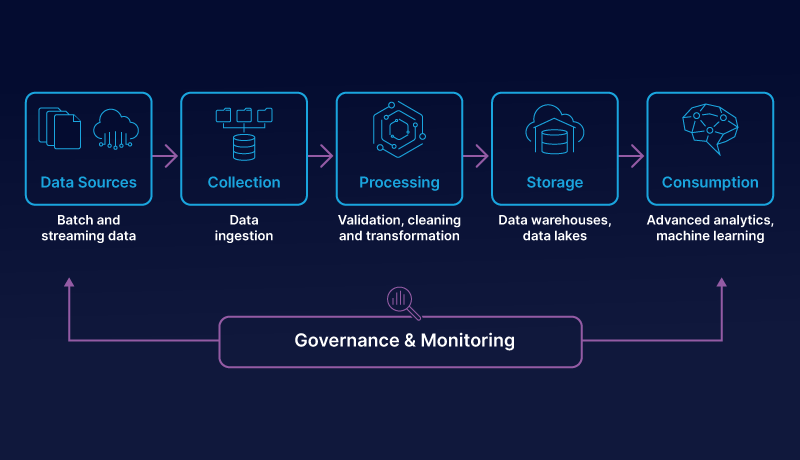

The process of building a data pipeline typically involves several stages, such as:

- Data extraction: Data is extracted from one or more sources, such as databases, flat files, or APIs.

- Data transformation: The extracted data is then transformed to fit the destination’s schema, which includes cleaning, filtering, and formatting the data.

- Data loading: The transformed data is then loaded into the destination, such as a data warehouse, data lake, or cloud storage.
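
The three stages above can be sketched in a few lines of Python using only the standard library. This is a minimal sketch under stated assumptions: the source is a small CSV with hypothetical `id`, `name`, and `amount` columns, and an in-memory SQLite table named `sales` stands in for the warehouse. Real pipelines would use their own connectors for each source and destination.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this would come from a file, database, or API.
RAW_CSV = """id,name,amount
1, Alice ,10.50
2,Bob,
3,carol,7.25
"""

def extract(text):
    """Extract: parse CSV rows into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: drop rows missing an amount, clean names, convert types."""
    cleaned = []
    for row in rows:
        if not row["amount"].strip():
            continue  # filter out incomplete records
        cleaned.append((int(row["id"]), row["name"].strip().title(), float(row["amount"])))
    return cleaned

def load(rows, conn):
    """Load: write the transformed rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (id INTEGER PRIMARY KEY, name TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT * FROM sales ORDER BY id").fetchall())
```

Keeping each stage as a separate function makes it possible to test, replace, or rerun one stage without touching the others.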

Data pipelines can be used for a variety of purposes, such as:

- Extracting and loading data into a data warehouse for analysis.

- Processing sensor data from IoT devices in real time.

- Integrating data from multiple sources into a unified view.

- Streaming real-time data and processing it as it is received.

Data pipelines can be built using a variety of tools and technologies, including open-source and commercial options. Some popular open-source tools include Apache NiFi, Apache Kafka, and Apache Storm. Commercial options such as Talend, Informatica, and Alteryx are also widely used. Data pipelines require monitoring and maintenance to ensure they are running smoothly and meeting the organization’s needs.

Why do we need Data Pipelines?

Data pipelines are critical for organizations because they automate the flow of data, making it more efficient and reliable. There are several reasons why data pipelines are necessary, including:

- Data integration: Data pipelines allow organizations to extract data from multiple sources and integrate it into a unified view. This makes it easier to analyze and gain insights from the data.

- Automation: Data pipelines automate the process of extracting, transforming, and loading data, which reduces manual errors and the time required to process data.

- Scalability: Data pipelines can be designed to scale with large and growing volumes of data, which is important as data continues to grow at a rapid pace.

- Data quality: Data pipelines help organizations ensure that their data is accurate, consistent, and of high quality by detecting and handling missing, duplicate, and inconsistent data.

- Performance: Data pipelines can be designed to process data quickly and efficiently, which is important for organizations that need real-time access to their data.

- Security: Data pipelines can protect data from unauthorized access and help organizations comply with data privacy regulations.

- Cost-effectiveness: Data pipelines can be a cost-effective way to process large amounts of data. They save time and money by automating manual processes and reducing the need for specialized resources.

In conclusion, data pipelines are necessary for organizations because they automate the flow of data, making it more efficient and reliable. They help organizations integrate data, automate processing, scale with growing volumes, improve data quality and performance, and keep data secure. Data pipelines can also be cost-effective, saving time and money while helping organizations gain valuable insights from their data.

There are several types of data pipelines, including:

- Extract, transform, load (ETL) pipelines: These pipelines extract data from one or more sources, transform it to fit the destination’s schema, and then load it into the destination.

- Extract, load, transform (ELT) pipelines: These pipelines extract data from one or more sources, load it into the destination, and then transform it to fit the destination’s schema.

- Data integration pipelines: These pipelines extract data from one or more sources, and then integrate it into a unified view.

- Data streaming pipelines: These pipelines extract data in real time and process it as it is received.
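
The practical difference between ETL and ELT is where the transformation runs. In the ELT sketch below, raw records are loaded unchanged into a staging table and then transformed with SQL inside the destination itself. SQLite stands in for a warehouse here, and the table and column names (`raw_orders`, `revenue_by_product`) are hypothetical.

```python
import sqlite3

# Hypothetical raw records, loaded exactly as extracted (all strings, untrimmed).
raw_orders = [
    ("1", " widget ", "3", "2.50"),
    ("2", "gadget", "1", "10.00"),
    ("3", "widget", "2", "2.50"),
]

conn = sqlite3.connect(":memory:")

# Load: land the raw data in a staging table without transforming it.
conn.execute("CREATE TABLE raw_orders (id TEXT, product TEXT, qty TEXT, price TEXT)")
conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?, ?)", raw_orders)

# Transform: use the destination's own SQL engine to clean, cast, and aggregate.
conn.execute("""
    CREATE TABLE revenue_by_product AS
    SELECT TRIM(product) AS product,
           SUM(CAST(qty AS INTEGER) * CAST(price AS REAL)) AS revenue
    FROM raw_orders
    GROUP BY TRIM(product)
""")

print(conn.execute("SELECT * FROM revenue_by_product ORDER BY product").fetchall())
```

Because the raw table is kept, the transformation can be rewritten and rerun later without extracting the data again, which is one of the usual arguments for ELT.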

When building a data pipeline, there are several considerations to keep in mind, including:

- Data quality: The data pipeline should be able to handle missing, duplicate, and inconsistent data.

- Scalability: The data pipeline should be able to handle a large volume of data.

- Performance: The data pipeline should be able to process data quickly and efficiently.

- Security: The data pipeline should be secure, and data should be protected from unauthorized access.
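
As an illustration of the data quality requirement, the sketch below handles the three problem types named above: it normalizes inconsistent values, fills or rejects missing ones, and drops duplicates. The field names, the default country, and the choice of `email` as the deduplication key are assumptions made for the example.

```python
def clean_records(records, default_country="US"):
    """Normalize, de-duplicate, and validate a list of customer dicts.

    Returns (clean, rejected). Field names and rules are illustrative only.
    """
    seen = set()
    clean, rejected = [], []
    for rec in records:
        email = (rec.get("email") or "").strip().lower()  # fix inconsistent case/whitespace
        if not email:
            rejected.append(rec)  # missing a required field: reject the record
            continue
        if email in seen:
            continue  # duplicate by business key: drop it
        seen.add(email)
        country = (rec.get("country") or default_country).strip().upper()  # fill missing value
        clean.append({"email": email, "country": country})
    return clean, rejected

records = [
    {"email": "A@Example.com ", "country": "us"},
    {"email": "a@example.com", "country": "US"},  # duplicate after normalization
    {"email": None, "country": "DE"},             # missing email
    {"email": "b@example.com"},                   # missing country
]
clean, rejected = clean_records(records)
print(clean)
print(len(rejected))
```

Returning the rejected records instead of discarding them lets the pipeline report or quarantine them for review.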

Examples of data pipelines include:

- A pipeline that extracts customer data from a CRM system, transforms it to fit the schema of a data warehouse, and then loads it into the data warehouse for analysis.

- A pipeline that extracts sensor data from IoT devices, processes it in real time, and then loads it into a data lake for analysis.

- A pipeline that extracts data from multiple sources, such as social media, website logs, and customer feedback, and then integrates it into a unified view for analysis.
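
In the IoT example above, the real-time processing step often aggregates readings over fixed time windows. The generator-based sketch below computes per-device averages over tumbling (non-overlapping) windows as readings arrive. The `(timestamp, device_id, value)` reading format, the 60-second window, and the assumption that readings arrive in timestamp order (so late data is not handled) are all illustrative.

```python
from collections import defaultdict

def tumbling_window_avg(readings, window_seconds=60):
    """Yield (window_start, device_id, avg_value) each time a window closes.

    `readings` is any iterable of (timestamp, device_id, value) tuples,
    assumed to arrive in timestamp order (no late-data handling).
    """
    current_window = None
    sums = defaultdict(lambda: [0.0, 0])  # device_id -> [total, count]
    for ts, device, value in readings:
        window = ts - ts % window_seconds
        if current_window is not None and window != current_window:
            # The previous window is complete: emit its aggregates.
            for dev, (total, count) in sorted(sums.items()):
                yield current_window, dev, total / count
            sums.clear()
        current_window = window
        sums[device][0] += value
        sums[device][1] += 1
    # Flush the final window when the stream ends.
    for dev, (total, count) in sorted(sums.items()):
        yield current_window, dev, total / count

stream = [(0, "s1", 20.0), (30, "s1", 22.0), (45, "s2", 18.0), (61, "s1", 25.0)]
for result in tumbling_window_avg(stream):
    print(result)
```

Because the function consumes an iterable lazily, the same code works on a list for testing and on an unbounded source such as a message-queue consumer in production.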

In conclusion, data pipelining is a powerful process that allows organizations to automate the flow of data, making it more efficient and reliable. There are many types of data pipelines, and building a pipeline requires careful consideration of factors such as data quality, scalability, performance, and security. With the right pipeline in place, organizations can gain valuable insights from their data, which can help them make better decisions and improve their operations.

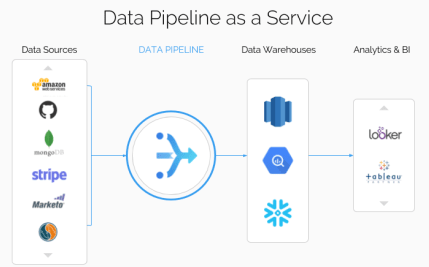

Another important consideration when building a data pipeline is the choice of technology. There are several tools and technologies available for building data pipelines, including open-source and commercial options. Some popular open-source tools include Apache NiFi, Apache Kafka, and Apache Storm. These tools provide a wide range of functionality and can be easily integrated with other open-source technologies.

Commercial options such as Talend, Informatica, and Alteryx are also widely used. These tools often provide a more user-friendly interface and additional functionality such as data quality, data profiling, and data governance. Additionally, they offer vendor support and maintenance, which can be essential for organizations that require a high level of reliability and performance.

When building a data pipeline, it’s also important to consider the architecture of the pipeline. A common architecture for data pipelines is the Lambda Architecture, which consists of three layers: the batch layer, the serving layer, and the speed layer. The batch layer stores the complete, append-only history of the data and periodically precomputes views over it; the serving layer indexes those batch views so they can be queried with low latency; and the speed layer processes recent data as it arrives, covering the gap until the next batch run. Queries merge the batch and real-time views, which allows the pipeline to handle both batch and real-time processing over large volumes of data.
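
How the Lambda layers fit together at query time can be shown with a toy example. In this sketch, which assumes page-view counts as the metric and uses in-memory counters instead of a distributed batch engine and stream processor, the batch view is recomputed from the full history, the speed view counts only events that arrived after the last batch run, and a serving function answers queries by merging the two.

```python
from collections import Counter

# Master dataset: immutable, append-only event history (page views).
master_events = ["home", "about", "home", "pricing"]

def batch_layer(events):
    """Recompute the batch view from the complete history (slow, periodic)."""
    return Counter(events)

class SpeedLayer:
    """Incrementally count events that arrived after the last batch run."""
    def __init__(self):
        self.view = Counter()

    def process(self, event):
        self.view[event] += 1

    def reset(self):
        self.view.clear()  # called once a new batch view covers these events

def serving_layer(batch_view, speed_view, page):
    """Answer a query by merging the precomputed batch view with recent data."""
    return batch_view[page] + speed_view[page]

batch_view = batch_layer(master_events)
speed = SpeedLayer()
for event in ["home", "pricing"]:  # new events not yet in the batch view
    speed.process(event)
    master_events.append(event)    # also appended to the master dataset

print(serving_layer(batch_view, speed.view, "home"))
```

The cost of this design is that the same logic (here, counting) must be implemented in both the batch and speed layers, which is the duplication the Kappa architecture tries to remove.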

Another important aspect of building a data pipeline is monitoring and maintenance. Data pipelines need to be monitored to ensure that they are running smoothly and to detect and resolve any issues that may arise. This includes monitoring the performance of the pipeline, monitoring the data quality, and monitoring the data lineage. Additionally, regular maintenance is necessary to ensure that the pipeline is up-to-date and that it continues to meet the organization’s needs.
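
Basic pipeline monitoring can start with recording metrics for each stage. The sketch below wraps a stage function in a hypothetical `monitored` decorator that logs the stage’s duration and output row count, and warns when a stage returns fewer rows than an assumed minimum. A production pipeline would typically also send such metrics to a monitoring or alerting system rather than relying on the standard `logging` module alone.

```python
import functools
import logging
import time

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("pipeline")

def monitored(min_rows=1):
    """Hypothetical helper: record duration and row count for a pipeline stage."""
    def decorator(stage):
        @functools.wraps(stage)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            result = stage(*args, **kwargs)
            elapsed = time.perf_counter() - start
            wrapper.last_metrics = {"rows": len(result), "seconds": elapsed}
            log.info("%s produced %d rows in %.3fs", stage.__name__, len(result), elapsed)
            if len(result) < min_rows:
                log.warning("%s produced fewer than %d rows", stage.__name__, min_rows)
            return result
        wrapper.last_metrics = None  # populated after the first call
        return wrapper
    return decorator

@monitored(min_rows=2)
def filter_valid(rows):
    return [r for r in rows if r.get("value") is not None]

filter_valid([{"value": 1}, {"value": None}])  # logs a warning: only 1 row
print(filter_valid.last_metrics["rows"])
```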

In conclusion, data pipelining is a powerful process that allows organizations to automate the flow of data, making it more efficient and reliable. Building a data pipeline requires careful consideration of factors such as data quality, scalability, performance, security, and technology. Regular monitoring and maintenance are also important to ensure that the pipeline is running smoothly and meeting the organization’s needs. With the right pipeline in place, organizations can gain valuable insights from their data, which can help them make better decisions and improve their operations.

Data Pipeline Architecture:

Data pipeline architecture refers to the design and structure of a data pipeline and how it handles data flow, data processing, and data storage. There are several common architectures for data pipelines, including:

- Linear Pipeline Architecture: This is the simplest and most basic architecture, where data flows through a linear sequence of steps, from extraction to loading.

- Lambda Architecture: This architecture is designed to handle both real-time and batch processing and consists of three layers: the batch layer, the serving layer, and the speed layer. The batch layer stores the complete historical dataset and precomputes views over it, the serving layer makes those views available for low-latency queries, and the speed layer processes recent data in real time until the next batch run catches up.

- Kappa Architecture: A variation of the Lambda architecture designed for real-time processing, Kappa architecture has no batch layer. All data is treated as a stream, and historical data is reprocessed by replaying an append-only event log through the same stream-processing code.

- Microservices Architecture: This architecture is based on breaking down a pipeline into small, independent services that can be developed, deployed, and scaled independently.

- Cloud-Native Architecture: This architecture is designed to be deployed in a cloud environment and uses cloud-native technologies such as containers, Kubernetes, and serverless computing.
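
The Kappa idea of a single streaming code path can be sketched with an append-only Python list standing in for a durable, replayable log (in practice, something like a Kafka topic). Live processing and reprocessing use the same function: to rebuild state, for example after changing the processing logic, the consumer simply replays the log from offset 0. The event shape and the account-balance state are assumptions for illustration.

```python
# Append-only event log; stands in for a durable, replayable log.
event_log = [
    {"account": "a", "amount": 100},
    {"account": "b", "amount": 50},
    {"account": "a", "amount": -30},
]

def process(events, state=None, start_offset=0):
    """Single stream-processing code path: fold events into per-account balances.

    Returns (state, next_offset) so the caller can resume where it stopped.
    """
    state = dict(state or {})
    for event in events[start_offset:]:
        state[event["account"]] = state.get(event["account"], 0) + event["amount"]
    return state, len(events)

# Live processing: consume what is in the log, then resume as new events arrive.
state, offset = process(event_log)
event_log.append({"account": "b", "amount": 25})
state, offset = process(event_log, state, offset)

# Reprocessing: replay the full log from offset 0 through the same code path.
rebuilt, _ = process(event_log)
print(state == rebuilt, state)
```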

When designing a data pipeline architecture, it’s important to consider factors such as scalability, performance, security, and cost. The architecture should be able to handle a large volume of data, process data quickly and efficiently, protect data from unauthorized access, and be cost-effective.

In conclusion, data pipeline architecture refers to the design and structure of a data pipeline and how it handles data flow, data processing, and data storage. Common architectures for data pipelines include linear, Lambda, Kappa, microservices, and cloud-native. Factors such as scalability, performance, security, and cost should be taken into consideration when designing a data pipeline architecture to ensure it meets the organization’s needs.

Another important aspect of building a data pipeline is testing. Data pipelines need to be thoroughly tested to ensure that they are working correctly and delivering accurate results. This includes unit testing, integration testing, and end-to-end testing.

Unit testing is a technique used to test individual components or modules of a pipeline. This type of testing is done by developers and is used to ensure that each component of the pipeline is functioning as expected.
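
A unit test exercises one transformation in isolation, with small hand-written inputs and no external systems. The sketch below uses Python’s built-in `unittest` module; `normalize_phone` is a hypothetical transformation step invented for the example, and the tests are run with an explicit runner so the script does not exit afterwards.

```python
import re
import unittest

def normalize_phone(raw):
    """Hypothetical transform: keep digits only; return None unless 10 digits remain."""
    digits = re.sub(r"\D", "", raw or "")
    return digits if len(digits) == 10 else None

class NormalizePhoneTest(unittest.TestCase):
    def test_strips_formatting(self):
        self.assertEqual(normalize_phone("(555) 123-4567"), "5551234567")

    def test_rejects_wrong_length(self):
        self.assertIsNone(normalize_phone("123"))

    def test_handles_missing_value(self):
        self.assertIsNone(normalize_phone(None))

if __name__ == "__main__":
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(NormalizePhoneTest)
    unittest.TextTestRunner(verbosity=2).run(suite)
```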

Integration testing is done after unit testing and checks the interactions between different components of the pipeline. Its purpose is to ensure that the components communicate correctly, for example that the output of one stage matches the input expected by the next.

End-to-end testing is the final stage of testing. It exercises the pipeline from end to end, including all the data sources and destinations, to ensure that the pipeline is working correctly and that the data is being correctly extracted, transformed, and loaded.
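
An end-to-end test runs the whole pipeline against controlled sources and destinations and checks only the final output. The sketch below assumes a tiny hypothetical pipeline, `run_pipeline`, that reads a CSV file and writes to an SQLite database; the test creates both in a temporary directory so it leaves nothing behind.

```python
import csv
import os
import sqlite3
import tempfile
import unittest

def run_pipeline(csv_path, db_path):
    """Hypothetical pipeline: CSV -> uppercase country codes -> SQLite table."""
    with open(csv_path, newline="") as f:
        rows = [(r["id"], r["country"].strip().upper()) for r in csv.DictReader(f)]
    conn = sqlite3.connect(db_path)
    try:
        with conn:  # commits the transaction if no exception is raised
            conn.execute("CREATE TABLE IF NOT EXISTS customers (id TEXT PRIMARY KEY, country TEXT)")
            conn.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?)", rows)
    finally:
        conn.close()

class PipelineEndToEndTest(unittest.TestCase):
    def test_csv_to_database(self):
        with tempfile.TemporaryDirectory() as tmp:
            src = os.path.join(tmp, "customers.csv")
            db = os.path.join(tmp, "warehouse.db")
            with open(src, "w", newline="") as f:
                f.write("id,country\n1, us\n2,de\n")
            run_pipeline(src, db)
            conn = sqlite3.connect(db)
            try:
                rows = conn.execute("SELECT id, country FROM customers ORDER BY id").fetchall()
            finally:
                conn.close()
            self.assertEqual(rows, [("1", "US"), ("2", "DE")])

if __name__ == "__main__":
    unittest.TextTestRunner(verbosity=2).run(
        unittest.defaultTestLoader.loadTestsFromTestCase(PipelineEndToEndTest)
    )
```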

Another important aspect of building a data pipeline is data governance. Data governance is the process of managing, maintaining, and controlling access to data. This includes creating policies and procedures for data management, data quality, data security, and data privacy. The goal of data governance is to ensure that data is accurate, consistent, and protected from unauthorized access.
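
Governance policies are usually enforced in code at specific points in the pipeline. As one hedged example, the sketch below applies a hypothetical column-level policy before loading: columns marked as personal data are pseudonymized with a keyed hash (HMAC-SHA256), so records can still be joined on them without exposing raw values, and columns not listed in the policy are dropped. The policy format and key handling are assumptions; in practice the key would come from a secrets manager.

```python
import hashlib
import hmac

# Hypothetical policy: which columns may reach the destination, and how.
POLICY = {"customer_id": "keep", "email": "pseudonymize", "country": "keep"}
SECRET_KEY = b"replace-with-a-managed-secret"  # placeholder; never hard-code in production

def pseudonymize(value):
    """Deterministic keyed hash: the same input always maps to the same token."""
    return hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()

def apply_policy(record, policy=POLICY):
    """Return a copy of `record` that complies with the column-level policy."""
    out = {}
    for column, value in record.items():
        action = policy.get(column)  # columns not in the policy are dropped
        if action == "keep":
            out[column] = value
        elif action == "pseudonymize" and value is not None:
            out[column] = pseudonymize(value)
    return out

row = {"customer_id": 42, "email": "ann@example.com", "country": "DE", "ssn": "123-45-6789"}
print(apply_policy(row))
```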

When building a data pipeline, it’s also important to consider the cost and resources required. Building and maintaining a data pipeline can be a complex and resource-intensive task. It requires specialized skills and expertise and can be costly in terms of time, money, and resources. Organizations need to carefully consider the cost and resources required before building a data pipeline.

In conclusion, building a data pipeline is a complex process that requires careful planning and execution. It’s important to consider factors such as data quality, scalability, performance, security, technology, testing, data governance, and cost. Regular monitoring, maintenance, and testing are also essential to ensure that the pipeline is running smoothly and delivering accurate results. Organizations can gain valuable insights from their data by building a robust and reliable data pipeline, which can help them make better decisions and improve their operations.

Data Pipeline Tools: An Overview

There are many different tools available for building data pipelines, including open-source and commercial options. Some popular open-source tools include:

- Apache NiFi: A powerful data integration tool that allows for the creation of data flows and the manipulation of data. It’s used to automate the flow of data between systems and is designed to handle large volumes of data.

- Apache Kafka: A distributed streaming platform that is used for building real-time data pipelines and streaming applications. It’s designed to handle high-volume, high-throughput, low-latency data streams.

- Apache Storm: A distributed real-time computation system that is used for processing streaming data. It’s designed to handle high volumes of data and is used for real-time data processing.

- Apache Beam: A unified programming model for both batch and streaming data processing. It supports multiple runners including Apache Flink, Apache Spark, and Google Cloud Dataflow.

- Apache Airflow: A platform to programmatically author, schedule, and monitor workflows. Originally built at Airbnb and now an Apache Software Foundation project, it is widely adopted by organizations to manage their data pipeline workflows.
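
Orchestrators such as Airflow model a workflow as a directed acyclic graph (DAG) of tasks and, by default, run each task only after its upstream tasks have succeeded. The sketch below is not Airflow’s API; it uses the standard library’s `graphlib.TopologicalSorter` (Python 3.9+) to show the underlying dependency-ordering idea, with hypothetical task names and a placeholder `run_task` function.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks it depends on (its upstream tasks).
dag = {
    "extract_crm": set(),
    "extract_logs": set(),
    "transform": {"extract_crm", "extract_logs"},
    "load_warehouse": {"transform"},
    "refresh_dashboard": {"load_warehouse"},
}

def run_task(name):
    print(f"running {name}")  # placeholder for real work
    return name

sorter = TopologicalSorter(dag)
sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
completed = []
while sorter.is_active():
    ready = sorter.get_ready()  # tasks whose upstream tasks have all finished
    for task in sorted(ready):  # an orchestrator could run these in parallel
        completed.append(run_task(task))
        sorter.done(task)
```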

Commercial options include:

- Talend: A comprehensive data integration platform that includes data integration, data quality, and data governance capabilities.

- Informatica: A data integration and data management platform that includes data integration, data quality, and data governance capabilities.

- Alteryx: A data integration and data analytics platform that includes data integration, data quality, and data analytics capabilities.

- AWS Glue: A fully managed extract, transform, and load (ETL) service for preparing and loading data for analytics.

- Azure Data Factory: A fully managed data integration service for creating, scheduling, and orchestrating ETL and data integration workflows.

When choosing a data pipeline tool, it’s important to consider factors such as scalability, performance, security, and cost. The tool should be able to handle a large volume of data, process data quickly and efficiently, protect data from unauthorized access, and be cost-effective. Additionally, it should be able to handle the specific requirements of the organization and integrate with the organization’s existing technology stack.

Data Pipeline Design and Considerations: How to Build a Data Pipeline

Designing and building a data pipeline involves several stages and considerations, including:

- Identifying the data sources: The first step in building a data pipeline is to identify the data sources that will be used. This includes determining the type and format of the data, as well as the location and accessibility of the data.

- Defining the data requirements: The next step is to define the data requirements for the pipeline. This includes determining the type of data that will be used, the data format, and the data quality standards that must be met.

- Choosing the right tool: Once the data sources and requirements have been identified, the next step is to choose the right tool for building the pipeline. This includes considering factors such as scalability, performance, security, and cost.

- Designing the pipeline: After the tool has been chosen, the next step is to design the pipeline. This includes determining the architecture of the pipeline, the data flow, and the processing steps that will be used.

- Building the pipeline: With the design in place, the next step is to build the pipeline. This includes implementing the design, developing the code, and testing the pipeline.

- Monitoring and maintenance: Once the pipeline has been built, it’s important to monitor and maintain the pipeline. This includes monitoring the performance of the pipeline, monitoring the data quality, and monitoring the data lineage. Regular maintenance is also necessary to ensure that the pipeline is up-to-date and that it continues to meet the organization’s needs.
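
The data requirements step is easier to enforce when the requirements are written down as a machine-checkable contract. The sketch below, assuming a hypothetical orders feed, declares the expected fields and types with a dataclass, adds one example quality rule, and reports every violation in a record rather than stopping at the first. The type check is deliberately strict (an integer `amount` would be flagged), which is a design choice for the example.

```python
from dataclasses import dataclass, fields
from datetime import date

@dataclass(frozen=True)
class OrderContract:
    """Hypothetical data requirements for an orders feed."""
    order_id: int
    order_date: date
    amount: float

def validate(record):
    """Return a list of human-readable violations (empty if the record conforms)."""
    errors = []
    for f in fields(OrderContract):
        if record.get(f.name) is None:
            errors.append(f"missing {f.name}")
        elif not isinstance(record[f.name], f.type):
            errors.append(f"{f.name} should be {f.type.__name__}")
    amount = record.get("amount")
    if isinstance(amount, float) and amount < 0:
        errors.append("amount must be non-negative")  # example quality standard
    return errors

print(validate({"order_id": 1, "order_date": date(2024, 1, 5), "amount": 9.99}))
print(validate({"order_id": "1", "amount": -5.0}))
```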

Some additional considerations when building a data pipeline include:

- Data governance: Implementing policies and procedures for data management, data quality, data security, and data privacy.

- Data quality: The pipeline should be able to handle missing, duplicate, and inconsistent data.

- Scalability: The pipeline should be able to handle a large volume of data.

- Performance: The pipeline should be able to process data quickly and efficiently.

- Security: The pipeline should be secure, and data should be protected from unauthorized access.

In conclusion, building a data pipeline involves several stages and considerations. It starts with identifying the data sources and defining the data requirements, then moves on to choosing the right tool and designing and building the pipeline, and finally to monitoring and maintaining it. It’s important to take into account factors such as scalability, performance, security, cost, data governance, and data quality when building a data pipeline to ensure it meets the organization’s needs.

Data Pipeline Examples:

There are many different types of data pipelines, and they can be used for a variety of purposes. Some examples of data pipelines include:

- Extracting customer data from a CRM system, transforming it to fit the schema of a data warehouse, and then loading it into the data warehouse for analysis. This pipeline can be used to gain insights into customer behavior, preferences, and demographics.

- Extracting sensor data from IoT devices, processing it in real time, and then loading it into a data lake for analysis. This pipeline can be used to monitor and analyze sensor data in real time, such as temperature, humidity, and motion.

- Extracting data from multiple sources, such as social media, website logs, and customer feedback, and then integrating it into a unified view for analysis. This pipeline can be used to gain insights into customer sentiment, feedback, and preferences.

- Extracting data from a transactional database, transforming it to fit the schema of a data warehouse, and then loading it into the data warehouse for analysis. This pipeline can be used to gain insights into sales, inventory, and customer purchase patterns.

- Extracting data from log files, transforming it to fit the schema of a data warehouse, and then loading it into the data warehouse for analysis. This pipeline can be used to gain insights into website traffic, user behavior, and system performance.
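
For the log-file example, the transformation step is mostly parsing unstructured lines into rows that match the warehouse schema. The sketch below assumes web server logs in the Common Log Format and extracts a few columns with a regular expression; the output column names are hypothetical. Lines that do not match are counted rather than silently dropped, so the rejection rate can be monitored.

```python
import re
from datetime import datetime

# Common Log Format: host ident authuser [timestamp] "request" status size
CLF = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<ts>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) (?P<size>\d+|-)'
)

def parse_logs(lines):
    """Transform raw log lines into warehouse-ready rows; count unparseable lines."""
    rows, rejected = [], 0
    for line in lines:
        m = CLF.match(line)
        if not m:
            rejected += 1
            continue
        rows.append({
            "host": m["host"],
            "ts": datetime.strptime(m["ts"], "%d/%b/%Y:%H:%M:%S %z"),
            "method": m["method"],
            "path": m["path"],
            "status": int(m["status"]),
            "bytes": 0 if m["size"] == "-" else int(m["size"]),  # "-" means no body
        })
    return rows, rejected

sample = [
    '127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] "GET /apache_pb.gif HTTP/1.0" 200 2326',
    'not a log line',
]
rows, rejected = parse_logs(sample)
print(rows[0]["path"], rows[0]["status"], rejected)
```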

In conclusion, data pipelines can be used for a variety of purposes, such as extracting and loading data into a data warehouse for analysis, processing sensor data in real time, integrating data from multiple sources, extracting data from transactional databases and log files, and many other use cases. Data pipelines are powerful tools that allow organizations to automate the flow of data, make it more efficient and reliable, and gain valuable insights from their data.