Gradient Boosting Algorithm in Python

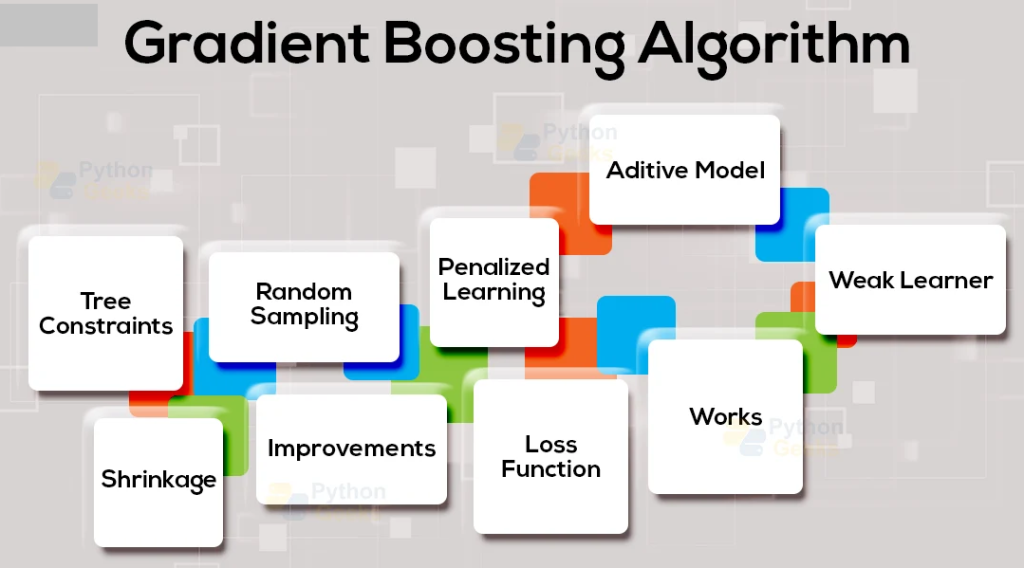

Gradient Boosting is a machine learning technique for regression and classification problems that produces a prediction model in the form of an ensemble of weak prediction models, typically decision trees. Like other boosting methods, it builds the model in a stage-wise fashion, and it generalizes them by allowing optimization of an arbitrary differentiable loss function.
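To make the stage-wise idea concrete, here is a minimal from-scratch sketch for the squared-error loss, where the negative gradient is (up to a constant factor) simply the residual. It is an illustration rather than how any particular library implements boosting; the learning rate, number of stages, and tree depth are arbitrary assumptions.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.tree import DecisionTreeRegressor

X, y = make_regression(n_features=4, n_informative=2, random_state=0)

learning_rate = 0.1  # shrinkage applied to each tree (illustrative value)
n_stages = 50        # number of boosting stages (illustrative value)

# Stage 0: start from a constant model, the mean of the targets.
prediction = np.full(y.shape, y.mean())
initial_mse = np.mean((y - prediction) ** 2)

trees = []
for _ in range(n_stages):
    # For squared-error loss, the negative gradient is the residual.
    residuals = y - prediction
    tree = DecisionTreeRegressor(max_depth=2, random_state=0)
    tree.fit(X, residuals)
    # Add a damped version of the new weak learner to the ensemble.
    prediction += learning_rate * tree.predict(X)
    trees.append(tree)

final_mse = np.mean((y - prediction) ** 2)
print(f"training MSE: {initial_mse:.1f} -> {final_mse:.1f}")
```

Each stage fits a small tree to what the current ensemble still gets wrong, which is why the training error shrinks as stages are added. Swapping in a different differentiable loss only changes how `residuals` is computed.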

In Python, you can use the scikit-learn library to implement Gradient Boosting.

The following is an example of how to use GradientBoostingRegressor to fit a regression model:

from sklearn.ensemble import GradientBoostingRegressor

from sklearn.datasets import make_regression

X, y = make_regression(n_features=4, n_informative=2, random_state=0)

regr = GradientBoostingRegressor(random_state=0)

regr.fit(X, y)
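Fitting on the full dataset says nothing about how well the model generalizes. The sketch below holds out part of the data and scores the fitted regressor on it; the 25% test size is an arbitrary assumption.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.model_selection import train_test_split

X, y = make_regression(n_features=4, n_informative=2, random_state=0)

# Hold out a quarter of the samples for evaluation (illustrative split).
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

regr = GradientBoostingRegressor(random_state=0)
regr.fit(X_train, y_train)

predictions = regr.predict(X_test)
r2 = regr.score(X_test, y_test)  # coefficient of determination on unseen data
print(f"held-out R^2: {r2:.3f}")
```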

Similarly, you can use GradientBoostingClassifier for classification problems:

from sklearn.ensemble import GradientBoostingClassifier

from sklearn.datasets import make_classification

X, y = make_classification(n_features=4, n_informative=2, random_state=0)

clf = GradientBoostingClassifier(random_state=0)

clf.fit(X, y)

The parameters of GradientBoostingRegressor and GradientBoostingClassifier, such as the number of trees (n_estimators), the learning rate (learning_rate), and the maximum tree depth (max_depth), can be tuned to improve the performance of the model.
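One common way to tune these parameters is a cross-validated grid search. The following is a minimal sketch using scikit-learn's GridSearchCV; the candidate values in the grid are assumptions chosen to keep the example fast, not recommended settings.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.model_selection import GridSearchCV

X, y = make_regression(n_features=4, n_informative=2, random_state=0)

# Small illustrative grid over the three parameters named above.
param_grid = {
    "n_estimators": [50, 100],
    "learning_rate": [0.05, 0.1],
    "max_depth": [2, 3],
}

search = GridSearchCV(
    GradientBoostingRegressor(random_state=0),
    param_grid,
    cv=3,  # 3-fold cross-validation for every combination
)
search.fit(X, y)

print("best parameters:", search.best_params_)
print("best cross-validated score:", search.best_score_)
```

After fitting, `search.best_estimator_` holds a model refit on all the data with the winning combination.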

You can also refer to the scikit-learn documentation for more information on the usage of the Gradient Boosting algorithm: https://scikit-learn.org/stable/modules/ensemble.html#gradient-tree-boosting

In addition to the scikit-learn library, there are also other popular libraries in Python that provide implementations of Gradient Boosting, such as XGBoost and LightGBM.

XGBoost (eXtreme Gradient Boosting) is an optimized and distributed gradient boosting library designed for performance and efficiency. It is widely used in industry and academia for its advanced features and computational speed.

Here is an example of how to use XGBoost for regression:

import xgboost as xgb

from sklearn.datasets import make_regression

X, y = make_regression(n_features=4, n_informative=2, random_state=0)

regr = xgb.XGBRegressor()

regr.fit(X, y)

Similarly, you can use XGBClassifier for classification problems:

import xgboost as xgb

from sklearn.datasets import make_classification

X, y = make_classification(n_features=4, n_informative=2, random_state=0)

clf = xgb.XGBClassifier()

clf.fit(X, y)

Like scikit-learn, XGBoost also provides several parameters that can be tuned to improve the performance of the model.

LightGBM is another open-source library that provides gradient boosting with decision trees. It is designed to be efficient and scalable, and it is particularly well-suited for large datasets.

Here is an example of how to use LightGBM for regression:

import lightgbm as lgb

from sklearn.datasets import make_regression

X, y = make_regression(n_features=4, n_informative=2, random_state=0)

regr = lgb.LGBMRegressor()

regr.fit(X, y)

Similarly, you can use LGBMClassifier for classification problems:

import lightgbm as lgb

from sklearn.datasets import make_classification

X, y = make_classification(n_features=4, n_informative=2, random_state=0)

clf = lgb.LGBMClassifier()

clf.fit(X, y)

LightGBM also provides various parameters that can be tuned to improve the performance of the model.

In summary, Gradient Boosting is a powerful ensemble technique that can be used for both regression and classification problems. The scikit-learn library provides a convenient and easy-to-use implementation of Gradient Boosting, and other libraries such as XGBoost and LightGBM also provide efficient and optimized implementations of the algorithm.

Beyond the libraries covered so far, CatBoost and H2O also provide gradient boosting implementations.

CatBoost is an open-source library developed by Yandex that provides gradient boosting on decision trees. It handles categorical features natively, so they do not need to be encoded by hand, and it provides an efficient implementation of the algorithm. Here is an example of how to use CatBoost for regression:

from catboost import CatBoostRegressor

from sklearn.datasets import make_regression

X, y = make_regression(n_features=4, n_informative=2, random_state=0)

regr = CatBoostRegressor(random_seed=0)

regr.fit(X, y)

And for classification:

from catboost import CatBoostClassifier

from sklearn.datasets import make_classification

X, y = make_classification(n_features=4, n_informative=2, random_state=0)

clf = CatBoostClassifier(random_seed=0)

clf.fit(X, y)

H2O is an open-source platform that provides a wide range of machine learning algorithms, including gradient boosting. It is designed for large datasets and can be run on a distributed cluster for increased performance. Here is an example of how to use H2O for gradient boosting:

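import h2o

import numpy as np

from h2o.estimators.gbm import H2OGradientBoostingEstimator

from sklearn.datasets import make_regression

h2o.init()

X, y = make_regression(n_features=4, n_informative=2, random_state=0)

frame = h2o.H2OFrame(np.column_stack([X, y]))  # H2O expects features and target in one frame

features, target = frame.columns[:-1], frame.columns[-1]

regr = H2OGradientBoostingEstimator()

regr.train(x=features, y=target, training_frame=frame)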

These libraries also provide a set of parameters that can be used to tune the performance of the model and make it more accurate.

It’s worth noting that when choosing a library for gradient boosting, it’s important to consider the specific requirements of your problem, such as the size of your dataset, the complexity of your model, and the resources available to you. Each library has its own strengths and weaknesses, so it’s a good idea to try out a few different options and compare their performance on your specific task before making a final decision.
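A simple way to run such a comparison is to fit each candidate on the same training split and score it on the same held-out split. The sketch below always includes scikit-learn and adds XGBoost and LightGBM only if they are installed. Default settings and a single split are simplifying assumptions; a real comparison would tune each model and use cross-validation.

```python
import importlib

from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split

X, y = make_regression(n_features=4, n_informative=2, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

candidates = {"scikit-learn": GradientBoostingRegressor(random_state=0)}

# Optional libraries: add each one only if it can be imported.
optional = {
    "XGBoost": ("xgboost", "XGBRegressor"),
    "LightGBM": ("lightgbm", "LGBMRegressor"),
}
for name, (module_name, class_name) in optional.items():
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        continue  # library not installed, skip it
    candidates[name] = getattr(module, class_name)()

# Fit every candidate on the same split and record its held-out R^2.
scores = {}
for name, model in candidates.items():
    model.fit(X_train, y_train)
    scores[name] = r2_score(y_test, model.predict(X_test))
    print(f"{name}: held-out R^2 = {scores[name]:.3f}")
```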

Gradient Boosting in Classification

Gradient Boosting can be used for classification problems in Python using the GradientBoostingClassifier class from the scikit-learn library, or the XGBClassifier and LGBMClassifier classes from the XGBoost and LightGBM libraries respectively.

Here is an example of how to use the GradientBoostingClassifier from scikit-learn for classification:

from sklearn.ensemble import GradientBoostingClassifier

from sklearn.datasets import make_classification

X, y = make_classification(n_features=4, n_informative=2, random_state=0)

clf = GradientBoostingClassifier(random_state=0)

clf.fit(X, y)
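Once fitted, the classifier can return both hard class labels and class probabilities. The sketch below evaluates it on a held-out split; the 25% test size and the stratified split are assumptions made for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import train_test_split

X, y = make_classification(n_features=4, n_informative=2, random_state=0)

# Stratify so both classes appear in the same proportion in each split.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

clf = GradientBoostingClassifier(random_state=0)
clf.fit(X_train, y_train)

labels = clf.predict(X_test)               # hard class labels
probabilities = clf.predict_proba(X_test)  # one column of probabilities per class
accuracy = clf.score(X_test, y_test)       # mean accuracy on unseen data
print(f"held-out accuracy: {accuracy:.3f}")
```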

Similarly, XGBoost’s XGBClassifier can be used in the following way:

import xgboost as xgb

from sklearn.datasets import make_classification

X, y = make_classification(n_features=4, n_informative=2, random_state=0)

clf = xgb.XGBClassifier()

clf.fit(X, y)

And LightGBM’s LGBMClassifier can be used as follows:

import lightgbm as lgb

from sklearn.datasets import make_classification

X, y = make_classification(n_features=4, n_informative=2, random_state=0)

clf = lgb.LGBMClassifier()

clf.fit(X, y)

As with regression, all of these implementations provide several parameters that can be tuned to improve the performance of the model.

CatBoost also provides a version for classification called CatBoostClassifier, for example:

from catboost import CatBoostClassifier

from sklearn.datasets import make_classification

X, y = make_classification(n_features=4, n_informative=2, random_state=0)

clf = CatBoostClassifier(random_seed=0)

clf.fit(X, y)

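In addition, H2O's H2OGradientBoostingEstimator handles classification as well. It trains a classifier when the target column is categorical, so the target is converted to a factor before training:

import h2o

import numpy as np

from h2o.estimators.gbm import H2OGradientBoostingEstimator

from sklearn.datasets import make_classification

h2o.init()

X, y = make_classification(n_features=4, n_informative=2, random_state=0)

frame = h2o.H2OFrame(np.column_stack([X, y]))  # H2O expects features and target in one frame

features, target = frame.columns[:-1], frame.columns[-1]

frame[target] = frame[target].asfactor()  # a categorical target makes H2O train a classifier

clf = H2OGradientBoostingEstimator()

clf.train(x=features, y=target, training_frame=frame)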

In conclusion, Gradient Boosting can be used for classification problems in Python using various libraries such as scikit-learn, XGBoost, LightGBM, CatBoost and H2O. Each library provides a convenient and easy-to-use implementation of the Gradient Boosting algorithm, and all of them provide several parameters that can be tuned to improve the performance of the model.